In a research building in the heart of UConn’s Storrs campus, assistant professor Ashwin Dani is teaching a life-size industrial robot how to think.

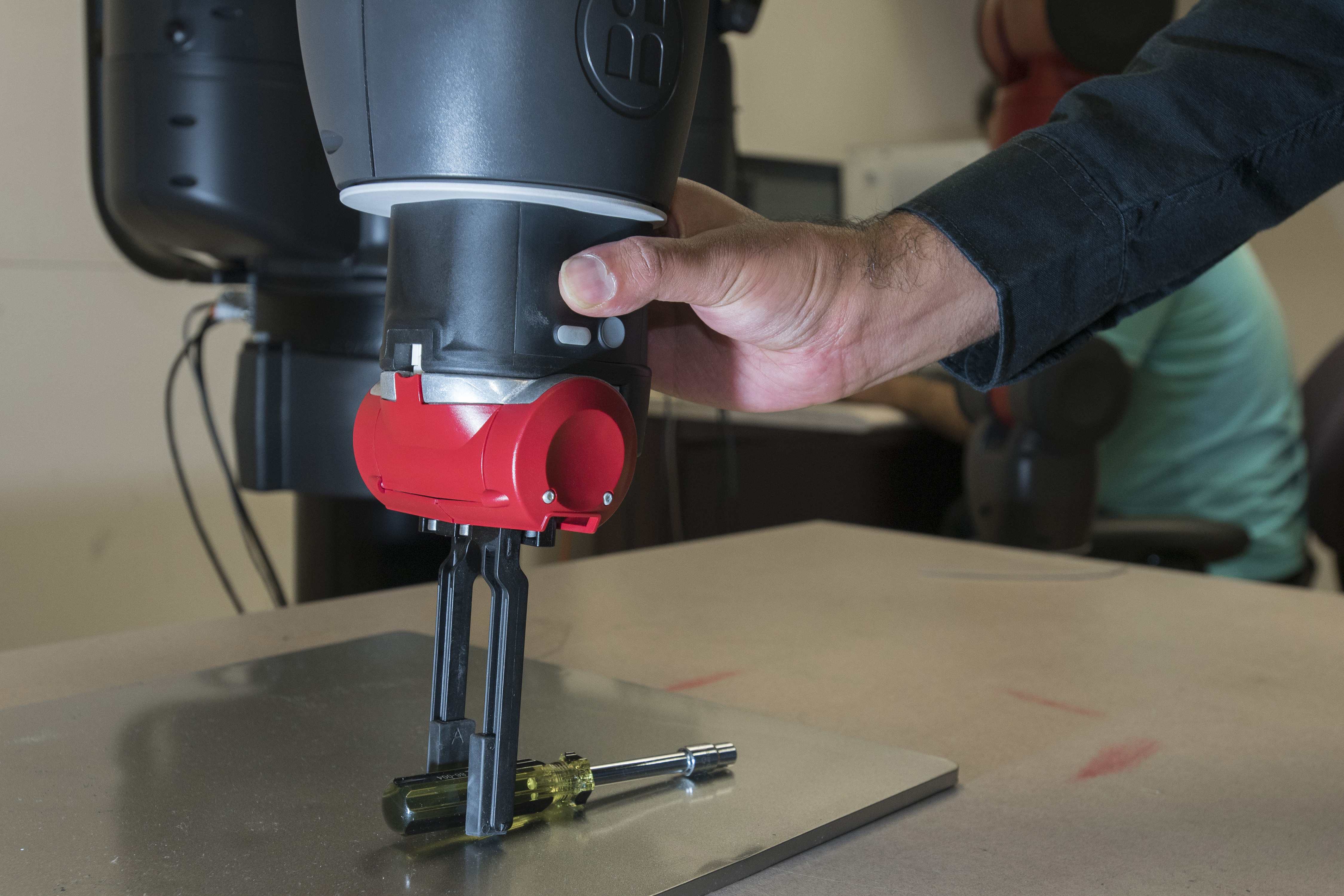

Here, on a recent day inside the University’s Robotics and Controls Lab, Dani and a small team of graduate students are showing the humanoid bot how to assemble a simple desk drawer.

The “eyes” on the robot’s face screen look on as two students build the wooden drawer, reaching for different tools on a tabletop as they work together to complete the task.

The robot may not appear intently engaged. But it isn’t missing a thing – or at least that’s what the scientists hope. Inside the robot’s circuitry, its processors are capturing and cataloging all of the humans’ movements through an advanced camera lens and motion sensors embedded in its metallic frame.

Ultimately, the UConn scientists hope to develop software that will teach industrial robots how to use their sensory inputs to quickly “learn” the various steps for a manufacturing task – such as assembling a drawer or a circuit board – simply by watching their human counterparts do it first.

“We’re trying to move toward human intelligence,” says Dani, the lab’s director and a faculty member in the School of Engineering. “We’re still far from what we want to achieve, but we’re definitely making robots smarter.”

To further enhance robotic intelligence, the UConn team is also developing a series of complex algorithms that will serve as an artificial neural network for the machines. These algorithms are meant to help robots apply what they see and learn, so that one day the robots can assist humans at their jobs, such as assembling furniture or installing parts on a factory floor. If the process works as intended, these bots will eventually know an assembly sequence so well that they can anticipate their human partner’s needs and pick up the right tools without being asked – even if the tools are no longer where they were when the robots were trained.

This kind of futuristic human-robot interaction, called collaborative robotics, is transforming manufacturing. Industrial robots like the one in Dani’s lab already exist. Currently, however, engineers must program them either by writing intricate computer code for each of the robot’s individual movements or by manually adjusting the robot’s limbs at each step of a process. Teaching industrial robots to learn manufacturing techniques simply by observing could shrink a process that can take engineers days down to minutes.

“Here at UConn, we’re developing algorithms that are designed to make robot programming easier and more adaptable,” says Dani. “We are essentially building software that allows a robot to watch these different steps and, through the algorithms we’ve developed, predict what will happen next. If the robot sees the first two or three steps, it can tell us what the next 10 steps are. At that point, it’s basically thinking on its own.”

In recognition of this transformative research, UConn’s Robotics and Controls Lab was recently chosen as one of 40 academic or academic-affiliated research labs supporting the U.S. government’s newly created Advanced Robotics for Manufacturing Institute (ARM). One of the institute’s primary goals is to advance robotics and artificial intelligence to keep American manufacturing competitive in the global economy.

“There is a huge need for collaborative robotics in industry,” says Dani. “With advances in artificial intelligence, lots of major companies like United Technologies, Boeing, BMW, and many small and mid-size manufacturers, are moving in this direction.”

The United Technologies Research Center, UTC Aerospace Systems, and ABB US Corporate Research – a leading international supplier of industrial robots and robot software – are also representing Connecticut as part of the new ARM Institute. The institute is led by American Robotics Inc., a nonprofit associated with Carnegie Mellon University.

Connecticut’s and UConn’s contribution to the initiative will be targeted toward advancing robotics in the aerospace and shipbuilding industries, where intelligent, adaptable robots are more in demand because of the industries’ specialized needs.

Joining Dani on the ARM project are UConn Board of Trustees Distinguished Professor Krishna Pattipati, the University’s UTC Professor in Systems Engineering and an expert in smart manufacturing; and assistant professor Liang Zhang, an expert in production systems engineering.

“Robotics, with wide-ranging applications in manufacturing and defense, is a relatively new thrust area for the Department of Electrical and Computer Engineering,” says Rajeev Bansal, professor and head of UConn’s electrical and computer engineering department. “Interestingly, our first two faculty hires in the field received their doctorates in mechanical engineering, reflecting the interdisciplinary nature of robotics. With the establishment of the new national Advanced Robotics Manufacturing Institute, both UConn and the ECE department are poised to play a leadership role in this exciting field.”

The aerospace, automotive, and electronics industries are expected to represent 75 percent of all robots used in the country by 2025. One of the goals of the ARM initiative is to increase small manufacturers’ use of robots by 500 percent.

Industrial robots have come a long way since they were first introduced, says Dani, who has worked with some of the country’s leading researchers in learning, adaptive control, and robotics at the University of Florida (Warren Dixon) and the University of Illinois at Urbana-Champaign (Seth Hutchinson and Soon-Jo Chung). Many of the first factory robots were blind, rudimentary machines. They were kept in cages and considered a potential danger to workers as their powerful hydraulic arms whipped back and forth on the assembly line.

Today’s advanced industrial robots are designed to be human-friendly. High-end cameras and elaborate motion sensors allow these robots to “see” and “sense” movement in their environment. Some manufacturers, like Boeing and BMW, already have robots and humans working side-by-side.

Of course, one of the biggest concerns within collaborative robotics is safety.

In response to those concerns, Dani’s team is developing algorithms that will allow industrial robots to quickly process what they see and adjust their movements accordingly when unexpected obstacles – like a human hand – get in their way.

“Traditional robots were very heavy, moved very fast, and were very dangerous,” says Dani. “They were made to do a very specific task, like pick up an object and move it from here to there. But with recent advances in artificial intelligence, machine learning, and improvements in cameras and sensors, working in close proximity with robots is becoming more and more possible.”

Dani acknowledges that the obstacles in his field are formidable. Even with advanced optics, smart industrial robots need to be taught how to distinguish a metal rod from a flexible piece of wiring, and to understand the different physics inherent in each.

Movements that humans take for granted are huge engineering challenges in Dani’s lab. For instance, inserting a metal rod into a pre-drilled hole is relatively easy. Picking up a flexible cable and plugging it into a receptacle is another challenge altogether. If the robot grabs the cable too far from the plug, the cable will likely flex and bend. Even if the robot grabs the cable properly, it must not only bring the plug to the receptacle but also orient the plug so that it matches the receptacle precisely.

“Perception is always a challenging problem in robotics,” says Dani. “In artificial intelligence, we are essentially teaching the robot to process the different physical phenomena it observes, make sense out of what it sees, and then make the appropriate response.”

Research in UConn’s Robotics and Controls Lab is supported by funding from the U.S. Department of Defense and the UTC Institute of Advanced Systems Engineering. Dani and graduate student Harish Ravichandar also have two patents pending on aspects of this research: “Early Prediction of an Intention of a User’s Actions,” Serial #15/659,827, and “Skill Transfer From a Person to a Robot,” Serial #15/659,881.