Infants are more likely to learn from on-screen instruction when they are paired with another infant than when they view the lesson alone, according to a new study.

You read that correctly: Infants.

Researchers at the University of Connecticut and the University of Washington examined the mechanisms involved in language learning among nine-month-olds, the youngest age group known to have been studied in relation to on-screen learning.

They found neural evidence of early learning among infants who were paired with a peer, compared with infants who viewed the instruction alone. Notably, the more often the infants were paired with new, unfamiliar partners, the better the babies' results.

“Novelty increased learning,” says Patricia K. Kuhl, Bezos Family Foundation Endowed Chair in Early Childhood Learning at the University of Washington. “What this study introduces for the first time is that part of the reason we learn better when we learn collaboratively is that a social partner increases arousal, and arousal in turn increases learning. Social partners not only provide information by showing us how to do things, but also provide motivation for learning.”

Kuhl worked with Adrian Garcia-Sierra, assistant professor of speech, language, and hearing sciences at UConn, and Sarah Roseberry Lytle, director of outreach and education at the Institute for Learning & Brain Sciences at the University of Washington.

Their paper, “Two Are Better Than One: Infant Language Learning From Video Improves in the Presence of Peers,” appears this month in the Proceedings of the National Academy of Sciences (PNAS).

The pattern of neural response shown by infants in the paired-exposure setting indicated more mature brain processing of the sounds, the researchers write, and it likely represents the earliest stages of infants’ sound learning.

The findings support previous research highlighting the importance of social interaction for children’s learning, especially from screen media.

Previous studies have confirmed that children learn language better from live humans than from screens, but other work has built on those findings to suggest that the problem may be not the screen itself but the lack of interactivity.

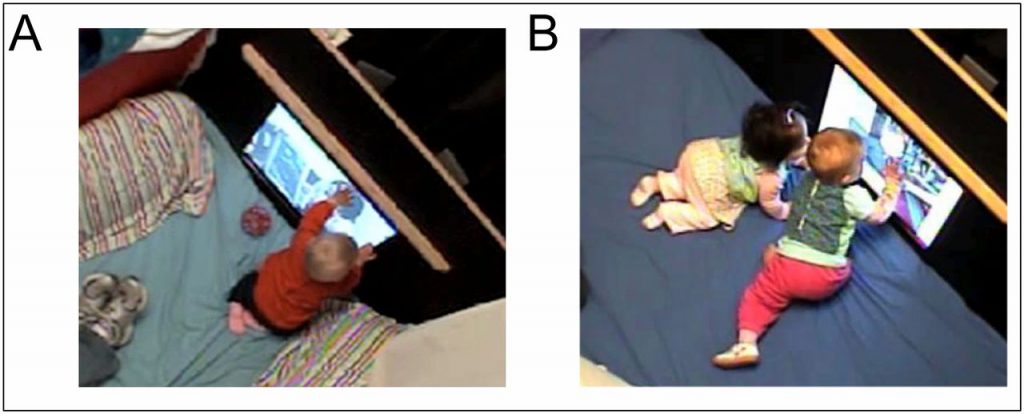

Thirty-one infants were enrolled in the study and randomly assigned to individual or paired conditions. Using touchscreen technology, the researchers put the infants in control of their video viewing, and the babies were “quick to learn” that they had to touch the screen to start a video, the team notes.

The results revealed brain-based evidence of immature phonemic learning in infants in the individual sessions, while infants in the paired sessions showed evidence of more mature learning.

Critically, the differences could not be attributed to the amount of exposure time, the number of videos viewed, touches to the touchscreen, or infants’ motor ability, the researchers say.

The study was supported by the National Science Foundation and The Ready Mind Project.