A cloudy day can ruin a trip to the beach, a scenic picnic, and lots of other outdoor activities.

But clouds in satellite imagery are also a big issue for remote sensing and land change scientists.

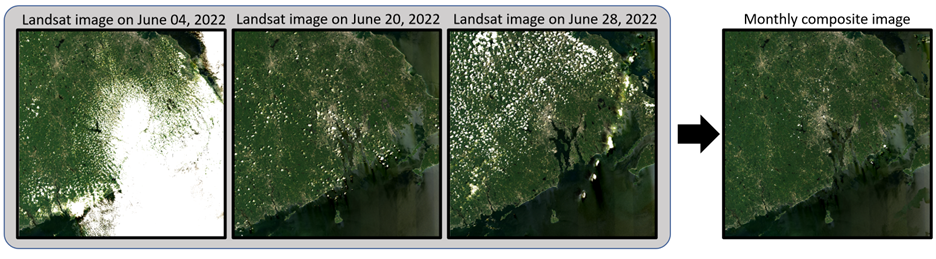

When scientists want to study how the land surface is changing, they often build composite images from multiple satellite images of the same place to create a representative “snapshot” of what is going on. But a single cloud, or even a cloud’s shadow, can ruin an image because it blocks the view of the land scientists are trying to study, leaving huge holes in the data.

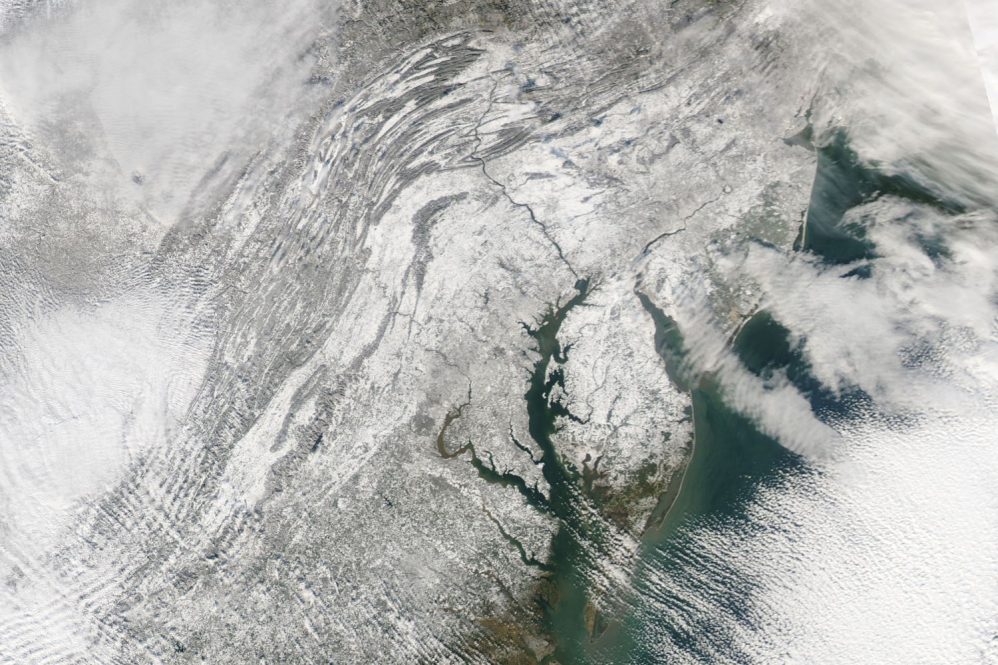

Globally, about 60% of all images captured by satellites contain cloud cover, making this a major issue for land change scientists.

This has led scientists to develop various algorithms to sort through satellite images and remove those with clouds to create a clear, usable composite image.

Two UConn researchers in the Department of Natural Resources and the Environment in the College of Agriculture, Health and Natural Resources (CAHNR) created a new algorithm for image compositing, as well as a framework for evaluating all other approaches. Shi Qiu, research assistant professor, and Zhe Zhu, assistant professor and director of the Global Environmental Remote Sensing Laboratory (GERS), recently published this work in Remote Sensing of Environment.

“If you don’t fill the holes in the data, the result will not be usable for a lot of people,” Zhu says. “It is the fundamental step if you want to do any kind of remote sensing analysis. You want to have a cloud-free image.”

Zhu and Qiu demonstrated that their new algorithm is the best method for creating composite images over a short, month-long timespan. In general, with remote sensing, a shorter timespan will provide a more accurate picture of how the land is changing, unless, for example, every image from a given month is filled with clouds or snow.

Zhu and Qiu’s algorithm uses a ratio index of two spectral bands to select the “best” observation from many candidate observations collected for the same location to fill in the data gaps created by cloud cover.
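To make the idea concrete, here is a minimal sketch of per-pixel "best observation" selection with a band ratio. It is not the authors' implementation. The article does not say which two bands are used or how the ratio is ranked, so the choice of near-infrared over blue and the rule "highest ratio wins" are assumptions made for illustration, as are the function name `ratio_composite` and the sample values. The intuition behind the assumed choice is that clouds tend to be bright in blue, which pushes the ratio down, while clear vegetated land tends to be dark in blue and bright in near-infrared.

```python
import numpy as np

def ratio_composite(numer, denom):
    """Pick, per pixel, the date with the highest numer/denom ratio.

    numer, denom: arrays of shape (T, H, W) holding two bands for T dates.
    NaN marks a missing observation.
    Returns (best_index, numer_out, denom_out), each of shape (H, W).
    """
    numer = np.asarray(numer, dtype=float)
    denom = np.asarray(denom, dtype=float)

    # Only observations with finite values and a positive denominator compete.
    valid = np.isfinite(numer) & np.isfinite(denom) & (denom > 0)

    # Invalid observations keep a ratio of -inf so they never win the argmax.
    ratio = np.full(numer.shape, -np.inf)
    np.divide(numer, denom, out=ratio, where=valid)

    best = np.argmax(ratio, axis=0)   # index of the winning date per pixel
    has_data = valid.any(axis=0)      # False where every date is invalid

    def pick(band):
        chosen = np.take_along_axis(band, best[None, ...], axis=0)[0]
        # Pixels with no valid date stay as gaps (NaN) in the composite.
        return np.where(has_data, chosen, np.nan)

    return best, pick(numer), pick(denom)

# Hypothetical reflectance values: 3 dates, 1 row, 2 pixels.
nir = np.array([[[0.30, 0.40]], [[0.45, np.nan]], [[0.50, 0.35]]])
blue = np.array([[[0.30, 0.05]], [[0.05, np.nan]], [[0.25, 0.07]]])

best, nir_out, blue_out = ratio_composite(nir, blue)
print(best.tolist())  # [[1, 0]]
```

In this toy stack, the first pixel is taken from the second date (ratio 9.0) and the second pixel from the first date (ratio 8.0), while the missing observation is ignored.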

Their algorithm is also unique because it uses surface reflectance from the original data set to detect and compensate for cloud cover. Some of the other algorithms rely on separate data sets of top-of-atmosphere reflectance images, which often require extra data downloading and preprocessing.

“The algorithm we developed is a very simple algorithm, but sometimes simple is best,” Qiu says.

In the paper, the authors also evaluated their algorithm against nine other existing methods for filling in data holes. They provide a framework for evaluating any given method, to help determine which one to use depending on what is being measured.

They evaluated these methods by comparing the composite image created by each to a cloud-free image. They hid the cloud-free image from the compositing method, so it was not incorporated into the final image. They were then able to evaluate how closely each composite matched the hidden image in terms of spectral, spatial, and application fidelity.
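The hold-out idea can be sketched in a few lines. This is an illustration only, not the framework from the paper: the article does not name the metrics behind spectral, spatial, and application fidelity, so root-mean-square error is used here as an assumed stand-in for a spectral score. The names `holdout_rmse` and `median_compositor`, and the sample values, are likewise hypothetical.

```python
import numpy as np

def holdout_rmse(stack, holdout_index, compositor):
    """Score a compositing method against a hidden cloud-free image.

    stack: array of shape (T, H, W), one band observed on T dates.
    holdout_index: date of the cloud-free image kept away from the compositor.
    compositor: function mapping a (T-1, H, W) stack to an (H, W) composite.
    """
    stack = np.asarray(stack, dtype=float)
    reference = stack[holdout_index]

    # The compositor only sees the remaining dates, never the reference.
    candidates = np.delete(stack, holdout_index, axis=0)
    composite = compositor(candidates)

    # Compare only pixels that are present in both images.
    ok = np.isfinite(composite) & np.isfinite(reference)
    if not ok.any():
        return float("nan")
    diff = composite[ok] - reference[ok]
    return float(np.sqrt(np.mean(diff ** 2)))

def median_compositor(candidates):
    """Placeholder method: per-pixel median over dates, ignoring gaps."""
    return np.nanmedian(candidates, axis=0)

# Hypothetical values: 4 dates, 1 row, 2 pixels. Date 0 is the clear reference;
# date 3 has a bright cloud over the first pixel; date 1 has a gap.
stack = np.array([
    [[0.10, 0.20]],
    [[0.12, np.nan]],
    [[0.08, 0.22]],
    [[0.90, 0.18]],
])

score = holdout_rmse(stack, holdout_index=0, compositor=median_compositor)
print(round(score, 4))  # 0.0141
```

Any compositing function with the same input and output shapes can be passed in place of `median_compositor`, which is what makes a side-by-side comparison of methods possible.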

The researchers specifically chose areas that would have an observable change caused by events like forest harvesting, fires, agriculture, or urban development. This allowed them to accurately evaluate which algorithms were most useful for studying land change.

This framework provides the field of remote sensing analysis with a powerful tool. Zhu says he is already teaching his students to use the framework, as evaluating which method to use is the critical first step to successfully creating the composite images they will need to conduct further analysis.

“We are providing a community service for the people who want to do image processing for image compositing so there is guidance, a good practice for people to use,” Zhu says.

This work relates to CAHNR’s Strategic Vision area focused on Fostering Sustainable Landscapes at the Urban-Rural Interface.
