An estimated 37 million mammograms are performed in the United States each year. The rate of abnormalities detected is low: about 2%. Still, false positives remain an ongoing challenge for radiologists using current computer-aided detection (CAD) technology, an issue that increases the cost of health care and causes needless anxiety for patients.

Two UConn researchers have developed a new approach to breast cancer detection, based on artificial intelligence (AI) deep learning, that takes aim at that problem. The Feature Fusion Siamese Network for Breast Cancer Detection, developed by Dr. Clifford Yang, an Associate Professor of Radiology at UConn School of Medicine, and Sheida Nabavi, an Associate Professor in UConn’s Department of Computer Science and Engineering, analyzes mammograms using a new algorithm that reduces false positives.

“We have a CAD system that consistently overcalls findings on mammograms. That is, it has a [high] false positive rate,” says Yang. “I have about 10 years of experience reading mammograms and I didn’t find the CAD system very helpful. One day it dawned on me that this is because the computer system only had one set of films for input.”

Currently, radiologists must manually compare a patient’s most recent mammogram to a previous image to identify changes and abnormalities in the breast. Because radiologists can examine upwards of 100 scans a day, this comparison is labor-intensive and susceptible to fatigue and errors.

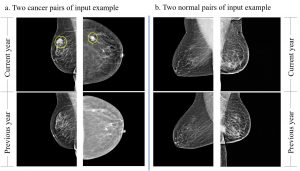

The Feature Fusion Siamese Network system uses AI to compare two sets of films taken at two different time points for diagnosis, eliminating the need for manual comparison, saving time, and reducing the potential for human error.

The system is named for the neural network architecture on which it is based. Neural networks are a form of artificial intelligence that teach computers to process data in a way that mimics the human brain. A Siamese neural network consists of two parallel networks that share the same configuration, parameters, and weights. In this case, the parallel networks analyze the current and previous mammogram scans.
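To make the weight-sharing idea concrete, here is a minimal Python sketch. It is not the UConn model: the one-layer "encoder", the random weights, the four-pixel stand-in images, and the names `make_shared_weights`, `encode`, and `siamese_forward` are all hypothetical simplifications of the deep convolutional branches a real system would use.

```python
import random
from typing import List, Tuple

Vector = List[float]
Matrix = List[List[float]]


def make_shared_weights(in_dim: int, out_dim: int, seed: int = 0) -> Matrix:
    """Create ONE weight matrix; both branches of the network reuse it (hypothetical toy weights)."""
    rng = random.Random(seed)
    return [[rng.uniform(-1.0, 1.0) for _ in range(in_dim)] for _ in range(out_dim)]


def encode(image: Vector, weights: Matrix) -> Vector:
    """Toy one-layer encoder: a linear projection followed by ReLU."""
    return [max(0.0, sum(w * x for w, x in zip(row, image))) for row in weights]


def siamese_forward(current: Vector, prior: Vector, weights: Matrix) -> Tuple[Vector, Vector]:
    """Pass the current and prior images through the SAME encoder weights."""
    return encode(current, weights), encode(prior, weights)


# Usage: a flattened 4-pixel "image" stands in for each mammogram (hypothetical data).
weights = make_shared_weights(in_dim=4, out_dim=3)
current_scan = [0.2, 0.8, 0.1, 0.0]
prior_scan = [0.2, 0.8, 0.1, 0.0]
f_cur, f_prev = siamese_forward(current_scan, prior_scan, weights)
print(f_cur == f_prev)  # True: identical inputs through shared weights give identical features
```

Because both branches use a single set of weights, any difference between the two feature vectors reflects a difference between the images themselves rather than a difference in how each branch processes its input.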

“I wanted to have a program that had the same input as a radiologist,” Yang says. “Our hope is that this system would function like a radiologist and would leverage the ability of the radiologist to read many more mammograms.”

Yang enlisted Nabavi to create the program. Nabavi and Jun Bai, a Ph.D. student in the Department of Computer Science and Engineering, created the novel approach, which extracts features from the current and previous images and then calculates a distance map between the two sets of features to compare them.

With scans from two different time points being compared, the system can correctly identify stable findings such as cysts, fibroadenomas, lymph nodes, and some calcifications as benign mammographic findings, Yang says.

Deep learning is good at extracting and comparing features such as the edge or contour of an abnormality, explains Nabavi. In one shot, the network can identify whether there’s an object in one of the paired images that is not in the other one.

“We generate feature maps from current and past images and compare them, pixel by pixel, to create a local map, and element by element to create a global map,” Nabavi says. “This AI model understands what’s normal and abnormal.”
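The comparison Nabavi describes can be sketched in a few lines of Python. This is an illustrative assumption, not the published model: here the "local map" is taken to be the Euclidean distance between the current and prior feature maps at each pixel, the "global map" is the element-wise absolute difference after averaging each channel, and the helper names, toy data, and change threshold are hypothetical.

```python
import math
from typing import List, Tuple

FeatureMap = List[List[List[float]]]  # indexed as [channel][row][col]


def local_distance_map(cur: FeatureMap, prev: FeatureMap) -> List[List[float]]:
    """Pixel by pixel: Euclidean distance between the channel vectors at each (row, col)."""
    channels, rows, cols = len(cur), len(cur[0]), len(cur[0][0])
    return [
        [math.sqrt(sum((cur[c][r][k] - prev[c][r][k]) ** 2 for c in range(channels)))
         for k in range(cols)]
        for r in range(rows)
    ]


def global_pool(fmap: FeatureMap) -> List[float]:
    """Average each channel over all spatial positions (global average pooling)."""
    return [sum(sum(row) for row in ch) / (len(ch) * len(ch[0])) for ch in fmap]


def global_distance_vector(cur: FeatureMap, prev: FeatureMap) -> List[float]:
    """Element by element: absolute difference between the pooled feature vectors."""
    return [abs(a - b) for a, b in zip(global_pool(cur), global_pool(prev))]


def changed_positions(local_map: List[List[float]], threshold: float) -> List[Tuple[int, int]]:
    """Flag positions whose local distance exceeds a (hypothetical) threshold."""
    return [(r, k) for r, row in enumerate(local_map)
            for k, d in enumerate(row) if d > threshold]


# Usage: 2 channels on a 2x2 grid; a new "object" appears at position (1, 1).
prev = [[[0.0, 0.0], [0.0, 0.0]], [[0.0, 0.0], [0.0, 0.0]]]
cur = [[[0.0, 0.0], [0.0, 3.0]], [[0.0, 0.0], [0.0, 4.0]]]
local = local_distance_map(cur, prev)
print(local)                             # [[0.0, 0.0], [0.0, 5.0]]
print(global_distance_vector(cur, prev)) # [0.75, 1.0]
print(changed_positions(local, 1.0))     # [(1, 1)]
```

The local map pinpoints where the two scans differ, while the global vector summarizes how much each feature channel changed overall; a stable finding would produce values near zero in both.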

Yang and Nabavi published their findings in 2022 and are working with Technology Commercialization Services (TCS) at UConn Research to begin testing the patent-pending technology and bring it to market. A lot of testing will be needed before the technology can hit the marketplace, Yang says. Existing AI approaches need modification to be applicable for clinical use, says Nabavi.

“Our first goal is to improve the efficacy of the current CAD system and reduce the burden of this labor-intensive activity on radiologists,” says Nabavi.

“If we develop a CAD system producing results in which we can be confident, we can save work, which should make mammograms less expensive and more available to women,” adds Yang. “If we show that it’s accurate we can license and commercialize the system in the mammography tech sphere.”

Michael Invernale, a senior licensing manager at TCS, characterizes the technology as a game-changer.

“Being able to do this sort of comparison quickly and effectively and without a margin of human error is hugely important,” Invernale says. “This is a way of analyzing mammograms that will enhance a radiologist’s ability to detect whether there is cancer. It’s an AI machine learning tool that places the new image over the patient’s prior image to give you a yes or a no. It’s an added level of confidence and a better, more reliable technology with the potential to detect cancer early and save lives.”