The processing and analysis of massive data as part of scientific problem solving require powerful high performance computing as well as enormous high speed storage and memory. In addition, some problems for which the data size is small may also require high performance computing due to processing complexity. At UConn, many researchers employ parallel computational techniques to solve problems, and they are reliant upon UConn’s high performance computing (HPC) capabilities to carry out their work.

Dr. Emmanouil Anagnostou, the Northeast Utilities (NU) Foundation Chair of Environmental Engineering, was an early investor in and adopter of UConn’s HPC capabilities because of the nature of his hazardous weather prediction research. A major project funded by NU involves the development of a predictive model that enables the various utilities within NU (Connecticut Light & Power, Western Massachusetts Company and NSTAR) to identify regions of their power distribution network that are particularly vulnerable to storm damage. With Drs. Brian Hartman (Mathematics) and Marina Astitha, he is incorporating vast amounts of historical storm and utility data as well as weather forecasts from the National Oceanic & Atmospheric Administration (NOAA) to predict the number of trouble spots, or unique outage events, associated with a storm. Forecasts based on predictive models running on the HPC network help NU identify, in advance of a storm, areas that are more prone to extreme weather damage, allowing the utilities to allocate and deploy crews for more efficient storm restoration and recovery. The time-sensitive nature of this work demands that the researchers have instant access to the HPC network and the staffing they require whenever a major storm approaches the state.

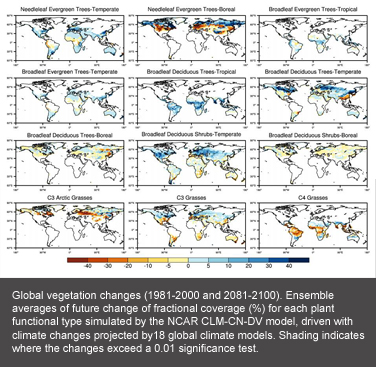

Dr. Guiling Wang (Civil & Environmental Engineering) is leading another highly complex research project, for which she is using a predictive model to investigate how different varieties of vegetation are affected long term by changes in temperature, precipitation, weather extremes and other climatological characteristics. Dr. Wang’s algorithm integrates results from her predictive model driven by data from 18 global climate models. She then compares the predicted vegetation changes for the period 1981-2000 against those for the period 2081-2100 to project future changes in vegetation. She notes that vegetation takes more than 200 years to reach a state of equilibrium with climate; thus, the results in the figure are based on over 8,000 years of model integration.

Dr. Sanguthevar Rajasekaran, the UTC Chair Professor of Computer Science & Engineering, has developed a motif search system (http://mnm.engr.uconn.edu) with NIH funding, which is used by biologists across the globe and relies upon UConn’s HPC capabilities. Motifs are patterns found in biological sequences that are vital for understanding gene function, human disease, drug design, and the like, and they are helpful in finding, for example, transcriptional regulatory elements and transcription factor binding sites. Dr. Rajasekaran relies upon the HPC’s massive parallelism capabilities particularly for his work involving one version of motif search, modeled as the (l, d)-motif search problem, for which the best-known algorithms require exponential time in some parameters.
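To give a sense of why this problem is so demanding, the (l, d)-motif search problem is commonly stated as follows: given a set of sequences, find every string of length l that appears in each sequence with at most d mismatches. The Python sketch below is a deliberately naive brute-force illustration of that definition, not the algorithm used by Dr. Rajasekaran's system. The function names, the DNA alphabet and the toy sequences are assumptions made for the example. Because it tries every one of the 4^l candidate strings, its running time grows exponentially with l, which hints at why massive parallelism is valuable.

```python
from itertools import product


def hamming(a: str, b: str) -> int:
    """Number of positions at which two equal-length strings differ."""
    return sum(x != y for x, y in zip(a, b))


def occurs_within(candidate: str, sequence: str, d: int) -> bool:
    """True if some window of `sequence` is within d mismatches of `candidate`."""
    l = len(candidate)
    return any(
        hamming(candidate, sequence[i:i + l]) <= d
        for i in range(len(sequence) - l + 1)
    )


def brute_force_motifs(sequences, l, d, alphabet="ACGT"):
    """Try all len(alphabet)**l candidates; keep those found in every sequence.

    Hypothetical helper for illustration only; the alphabet is an assumption.
    """
    motifs = []
    for letters in product(alphabet, repeat=l):
        candidate = "".join(letters)
        if all(occurs_within(candidate, s, d) for s in sequences):
            motifs.append(candidate)
    return motifs


if __name__ == "__main__":
    # Toy input invented for this example, not real biological data.
    toy = ["ACGTACGT", "TTACGAAC", "GGACCTTT"]
    print(brute_force_motifs(toy, l=4, d=1))
```

Each candidate can be checked independently of the others, so in principle the outer loop could be divided among many compute cores; practical motif search algorithms prune the candidate space far more aggressively than this sketch does.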

Over 100 faculty members – in disciplines ranging from engineering to molecular and cellular biology, agriculture and natural resources to economics – across the Storrs campus currently use UConn’s HPC services. These ranks will explode in coming years as UConn fulfills its ambitious faculty hiring initiative and Next Generation Connecticut investment in STEM education.

Growing Demand

UConn’s HPC facilities are operated by the Booth Engineering Center for Advanced Technology (BECAT) under the direction of Dr. Rajasekaran. Engineering Technical Services computer engineer Rohit Mehta and Ed Swindelles, Manager of Advanced Computing for the School of Engineering, have played pivotal roles in establishing, augmenting and stabilizing the center’s resources, and in training and managing the HPC staff.

The School of Engineering has maintained cutting-edge computing resources for more than 40 years, during which it has continually invested in not only equipment but also building/infrastructure and staffing support within BECAT, which was designated a University Center just this fall. Until recently, these resources were sufficient for most faculty needs, but over time, research has become dramatically more data-driven and complex.

To ensure UConn’s HPC capabilities continue to meet the needs of its current and future research customers, UConn Vice President for Research Dr. Jeffrey Seemann recently provided support to purchase additional high speed storage capacity. This investment increased the system’s high speed storage by a factor of 10, thereby eliminating a computational bottleneck in the existing system. Perhaps more important, the investment will help UConn nurture the organic evolution of a cross-disciplinary research culture in high performance computing.

From Silos to Centralization

Prior to 2011, faculty whose work produced or relied upon great volumes of data simply purchased, installed and maintained computer clusters within their own laboratories, a practice that was both inefficient and expensive. It was, says Swindelles, “an inefficient use of resources. Not only did they have to devote a portion of their start-up or grant money to install, use and maintain their independent clusters, they also had to install the climate-controlled infrastructure and electronics, and rely on computer-savvy graduate students to maintain the networks.”

In 2011, Dr. Mun Y. Choi – former Engineering Dean and now Provost – and Dr. Rajasekaran championed the centralization of high performance computing within a single center to increase economies of scale, encourage collaborative relationships, satisfy environmental and infrastructure requirements, provide much-needed professional staff, and free up valuable faculty funds previously expended in building siloed HPC capabilities in labs across campus.

That early push led to the purchase of a high-end, 104-node HPC cluster, code-named “Hornet.” The Hornet cluster contains both CPU and GPU resources, providing a massive amount of computing power. Over time, as demand for HPC services has exploded campus-wide, UConn Engineering has invested in additional nodes, high speed storage and memory.

UConn’s HPC capabilities currently include 1,408 compute cores (with 4 gigabytes of RAM per core), 250 terabytes of high speed storage, and 32 NVIDIA GPUs. The HPC operation is open 24 hours a day, seven days a week and staffed by computer professionals who maintain the equipment and provide customer service. Mehta keeps the cluster operational as equipment is continually added and demand increases. He also trains the BECAT student staff and provides introductory workshops for graduate student and faculty users, and he is central to the day-to-day operations of the center.

The HPC services are available to all faculty and graduate researchers, and full details are provided on the Hornet cluster Wiki page. After completing an online account application, researchers simply log in via SSH and submit their job requests through a scheduler. Those who choose to invest in the HPC capabilities enjoy priority access under a three-year “condo model,” according to Swindelles. New faculty members whose research is computationally heavy are given the option to commit a portion of their start-up packages toward the purchase of nodes; in exchange, they receive three years of priority status. Researchers may also choose to use the facility’s resources on a pay-as-you-go hourly basis through a first-come, first-served queue.